What is blk-mq?

I only heard about blk-mq and scsi-mq a few months ago. Blk-mq stands for block multi-queue, and while it isn’t a new term for those who follow what’s new in the Linux kernel, it is a relative newcomer to the Enterprise Linux distributions. The idea is to improve the host I/O stack when dealing with fast storage media such as NVMe flash. Let’s take a closer look at how it is done and what the benefits are.

The Linux block device I/O layer was designed many years ago with a focus on optimizing Hard Disk Drive (HDD) performance. As discussed in much more detail in references [1] and [2], it was based on a single queue (SQ) for I/O submission per block device, a single lock shared by all CPU cores whenever I/Os were submitted, removed, or reordered in the submission queue, and inefficient hardware interrupt handling, to name a few drawbacks.

With the increased use of Non-Volatile Memory (NVM) as primary storage (flash drives, or SSD storage), and soon also Storage Class Memory (SCM), the I/O bottleneck shifted from the storage media to the host I/O layer. All this opened the door to a new design: enter block multi-queue (MQ), or as it is often called, blk-mq. Btw, scsi-mq is the implementation of blk-mq for SCSI type block devices, i.e. /dev/sd (which are common in Fibre Channel based SAN storage).

Blk-mq introduces a two-layer design in which each block device gets multiple software I/O submission queues (one per CPU core) that eventually fan into one or more hardware dispatch queues for the device driver. Each software queue is handled in FIFO order by the core submitting the I/Os, and no longer requires the interrupts or the shared lock mechanism of the SQ design. For the time being it leaves out I/O reordering (the scheduler), as flash media doesn’t care if an I/O is random or sequential. However, I/O scheduling may be introduced again at a later time, for example, to coalesce smaller I/Os into larger ones.
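If you want to see this queue layout on a running system, sysfs is one place to look. The sketch below assumes the kernel exposes an mq directory per block device with one subdirectory per hardware dispatch queue and a cpu_list file inside it (that is the layout on the blk-mq kernels I am aware of). The function name show_mq_map and its optional root argument are my own, for illustration only.

```shell
#!/bin/sh
# Sketch: list the hardware dispatch queues of a block device and the CPUs
# mapped to each one. Assumes the sysfs layout <root>/<dev>/mq/<N>/cpu_list.
# show_mq_map and the root argument are illustrative, not part of any tool.
show_mq_map() {
    dev=$1
    root=${2:-/sys/block}
    if [ ! -d "$root/$dev/mq" ]; then
        echo "$dev: no mq directory (blk-mq not in use, or no such device)"
        return 1
    fi
    for q in "$root/$dev/mq"/*/; do
        # Skip anything that is not a queue directory with a CPU list.
        [ -f "${q}cpu_list" ] || continue
        echo "$dev queue $(basename "$q"): cpus $(cat "${q}cpu_list")"
    done
}

# Example (device name is a placeholder): show_mq_map sda
```

A device that reports a single queue with all CPUs listed is funneling every per-core software queue into one dispatch queue, while an NVMe device will typically show several.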

Figure 1: a MQ block layer (left) vs. SQ block layer (right) [2]

So, is it a good thing? Should we all move to blk-mq?

blk-mq and NVMe Flash SAN Storage

The plot thickens when you consider the difference between a host-resident NVMe flash card and a host connected via SAN to Enterprise Storage such as VMAX All Flash or PowerMax (with backend NVMe flash). A host NVMe flash card in itself is a single device and a single path, so adding software queues and optimizations with MQ makes a lot of sense.

But what about Enterprise Storage?

In the Enterprise Storage space it is common to use multipathing software to access storage devices, so in spite of the default behavior of a single queue per block device, the I/Os are sent back and forth via multiple paths.

Also, unlike a single NVMe flash card on the host, with Enterprise Storage we tend to use multiple storage devices for our databases. For example, an Oracle ASM disk group that stripes the database’s data at the host level across multiple LUNs (devices), and the VMAX All Flash or PowerMax that stripes the devices’ data across its backend pools of flash media.

With multiple paths, multiple devices, and striping both at the host and storage, we already have high I/O concurrency. Do we still benefit from MQ? Well, let’s run some tests and find out!

Enabling blk-mq

Before we start testing, we need to enable MQ. While recent Linux kernels enable it by default for block devices such as /dev/nvme, when it comes to SCSI devices such as /dev/sd, it isn’t enabled by default, in case the devices are still based on spinning media and therefore can benefit more from SQ with an I/O scheduler.

To enable MQ, you can use the grubby command to update the parameters directly on a specific kernel. For example, first check which kernels are available:

# grubby --info=ALL

index=0

kernel=/boot/vmlinuz-4.14.35-2025.404.1.1.el7uek.x86_64

args="ro crashkernel=auto rhgb quiet"

root=UUID=94a90939-3694-438b-bb91-2eedf799829e

initrd=/boot/initramfs-4.14.35-2025.404.1.1.el7uek.x86_64.img

title=Oracle Linux Server 7.8, with Unbreakable Enterprise Kernel 4.14.35-2025.404.1.1.el7uek.x86_64

...

Then, we can modify a specific kernel to update its arguments:

# grubby --update-kernel=/boot/vmlinuz-4.14.35-2025.404.1.1.el7uek.x86_64 --args="scsi_mod.use_blk_mq=1 dm_mod.use_blk_mq=y"

Remember to reboot for the new parameters to take effect.

The first parameter, scsi_mod.use_blk_mq=1, enables MQ for SCSI type block devices at the kernel level (0 disables it). The second parameter, dm_mod.use_blk_mq=y, enables MQ for device-mapper (DM), the native Linux multipathing; setting it to ‘n’ disables it for DM. As you can see, it is easy enough to enable or disable MQ if you want to try it. Don’t forget to reboot after each change.
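After the reboot, a quick sanity check is to confirm that the running kernel was actually booted with both parameters. Here is a minimal sketch that looks for them in /proc/cmdline. The helper name has_param is mine, and it does an exact, whole-word match on the two spellings used above.

```shell
#!/bin/sh
# Sketch: check whether a kernel command line carries the two MQ parameters.
# has_param is an illustrative helper; it takes the command line as a string
# so it can be tried on any text, not only on /proc/cmdline.
has_param() {
    cmdline=$1
    param=$2
    # Pad with spaces so only whole, space-separated words match.
    case " $cmdline " in
        *" $param "*) return 0 ;;
        *) return 1 ;;
    esac
}

if [ -r /proc/cmdline ]; then
    running=$(cat /proc/cmdline)
    for p in scsi_mod.use_blk_mq=1 dm_mod.use_blk_mq=y; do
        if has_param "$running" "$p"; then
            echo "$p: present"
        else
            echo "$p: missing"
        fi
    done
fi
```

Because the match is exact, a parameter written with a different but equivalent boolean spelling (for example =y instead of =1) will be reported as missing, so adjust the list to whatever you passed to grubby.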

That’s a good time to talk about the choice of Linux distribution and multipathing software when using MQ. At this time PowerPath supports MQ only on SLES 12, and will enable this support automatically if MQ is enabled in the kernel. I’m used to working with RHEL or OEL so I used DM, the native Linux multipathing software.

RHEL 7.4 is based on Linux kernel 3.10, and while it allowed me to enable MQ, after reboot I saw no SAN storage devices! Inspection of /var/log/messages showed that DM rejected each and every one of my /dev/sd storage devices as not valid for MQ. Strange. That led me to use OEL 7.4 with UEK, as it is based on kernel 4.1.12. Indeed, it worked without an issue and all the VMAX All Flash and PowerMax devices were accounted for.

Note that with RHEL 8.x and OL 8.x blk-mq has become default, likely due to the increased use of All Flash storage.

How do we know if blk-mq is enabled?

# cat /sys/module/scsi_mod/parameters/use_blk_mq

Y

# cat /sys/module/dm_mod/parameters/use_blk_mq

Y

Another indication is that if we look for the I/O scheduler, there is none when working with MQ:

# cat /sys/class/block/sd*/queue/scheduler | head

none

none

none

...
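With many devices, it is handier to see the device name next to its scheduler. The sketch below is one way to do that. It relies on the scheduler file listing the available choices with the active one in square brackets (for example "noop [deadline] cfq" on an SQ device), or just "none" when no scheduler is offered. The function names and the optional root argument are hypothetical, added so the logic can be tried against a scratch directory.

```shell
#!/bin/sh
# Sketch: report the active I/O scheduler for each SCSI disk.
# active_scheduler and report_schedulers are illustrative names.

# Print the bracketed (active) entry, or the whole line if nothing is bracketed.
active_scheduler() {
    case $1 in
        *"["*"]"*) echo "$1" | sed 's/.*\[\(.*\)\].*/\1/' ;;
        *) echo "$1" ;;
    esac
}

report_schedulers() {
    root=${1:-/sys/class/block}
    for f in "$root"/sd*/queue/scheduler; do
        [ -r "$f" ] || continue
        dev=${f#"$root"/}   # strip the leading root directory
        dev=${dev%%/*}      # keep only the device name
        echo "$dev: $(active_scheduler "$(cat "$f")")"
    done
}

report_schedulers
```

Keep in mind that newer kernels can offer schedulers for MQ devices as well, so on those a scheduler other than "none" does not by itself mean MQ is off; the use_blk_mq module parameters remain the more direct check.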

Blk-mq Tests with Oracle

Test environment

Hosts: A 4-node Oracle RAC was created for the tests:

- 2 x Dell PowerEdge R730 servers with 2 Intel Xeon E5-2690 v4 @ 2.66GHz (total of 28 cores), 128GB RAM. 2 x dual port 16Gbit HBAs (total of 4 initiators)

- 2 x Cisco UCS C240M3 servers with 2 Intel Xeon E5-2680 v2 @ 2.80GHz (total of 20 cores), 96GB RAM. 2 x dual port 16Gbit HBAs (total of 4 initiators)

In the 1 server tests only the first cluster node was used (one of the Dell servers), and in the 3 servers tests the first 3 nodes were used (the two Dells and one of the Cisco servers).

Note: Adding the 4th node to the SLOB OLTP workload didn’t provide additional performance benefits and therefore the 4th node was not used. Although not shown here, when Oracle DSS workload (sequential reads) was tested, all 4 nodes were necessary to drive close to 12GB/sec read bandwidth.

Storage: PowerMax 8000 with a single brick (one engine) and 1TB raw cache (mirrored). It’s a very small configuration, as PowerMax 8000 can scale up to 8 bricks across two racks.

Storage group configuration (device grouping for ease of management) was set as: data_sg with 16 x 200GB devices for the data files, redo_sg with 8 x 200GB devices for the redo logs, and grid_sg with 3 x 40GB devices for Oracle GRID infrastructure.

Zoning: Zoning was set at 16 paths per device. In other words, each of the 4 HBA initiators (per server) was zoned to 4 PowerMax FA ports. Heck, let’s get lots of multipathing I/O concurrency!

Database: Oracle 4-node RAC database and ASM release 12.2. ASM was using the following disk groups: +DATA (based on data_sg), +REDO (based on redo_sg), and +GRID (based on grid_sg). Some of the tests were performed with only one node, and some with three nodes for comparison.

Workload: SLOB 2.4 with 80 users and a 2TB database size, 25% update (OLTP), light redo generation, and 30 min of steady-state run for each test.

Note: DSS workload was also tested (parallel query, or sequential-read of a large Oracle fact table with many partitions). The bandwidth results with MQ were slightly better than without, though to keep this blog short it is focused on OLTP workload.

Test cases

The tests focused on 4 OLTP use cases:

- One server SLOB tests: when using a single server, the storage utilization is low (the host is the bottleneck), and we can more clearly see database performance differences based on just the host kernel optimizations, namely, MQ enabled or disabled.

- Three servers SLOB tests: when using three servers, the storage utilization was high (compared to the servers). Will host kernel related differences (MQ) still show benefit in this case?

Each of the above test cases (single server, or three servers) was run in two ways:

- SLOB workload randomly accessing the whole database (2TB in size). The outcome was a storage read-hit rate of 6%. This “read-miss” workload is a worst case scenario as PowerMax cache algorithms tend to deliver high read-hit ratio. Still, it is good to see the behavior under such conditions.

- SLOB workload behaving more like a ‘real-world’ workload, where some of the database is considered ‘hot’ and the rest ‘cold’. The queries accessed the hot data. The outcome was a storage read-hit rate of 60%, which is closer to how databases really behave.

In each test case both the database AWR metrics and PowerMax Unisphere performance metrics were reviewed.

Test results

One server, 6% storage read-hit

As can be seen in the table below, very significant benefits were noticed with MQ enabled. Oracle AWR shows IOPS improvement of 23%, and data files read latency improvement of 26% (in spite of the IOPS increase). We can also see a 26% increase in AWR executions and user commits per second, which indicates a higher transaction rate.

Unisphere also shows significant storage performance benefits, primarily in IOPS and data file write latency. Although we’re using ‘light’ SLOB redo generation, there is a small improvement in the redo log bandwidth, which is also an indication of higher database transaction rate.

One interesting thing to note is that when MQ was disabled, it took SLOB 4 threads to achieve the 186,214 data IOPS we’re seeing (80 users x 4 threads = 320 slob processes). However, when MQ was enabled, SLOB achieved higher IOPS (228,886) with just 2 threads (80 users x 2 threads = 160 slob processes). That means that MQ not only provided higher performance, but at much better efficiency (half of the database user processes).
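To put the efficiency point in numbers, here is a small worked calculation using only the figures quoted above (186,214 IOPS with 320 processes, 228,886 IOPS with 160 processes). The helper names pct_gain and per_process are mine.

```shell
#!/bin/sh
# Sketch: relative IOPS gain and IOPS per SLOB process, using the figures
# quoted in the text. pct_gain and per_process are illustrative helpers.
pct_gain() {
    awk -v base="$1" -v new="$2" 'BEGIN { printf "%.1f\n", (new - base) / base * 100 }'
}

per_process() {
    awk -v iops="$1" -v procs="$2" 'BEGIN { printf "%.0f\n", iops / procs }'
}

echo "IOPS gain with MQ: $(pct_gain 186214 228886)%"           # 22.9%
echo "IOPS per process, MQ off: $(per_process 186214 320)"     # 582
echo "IOPS per process, MQ on:  $(per_process 228886 160)"     # 1431
```

So each SLOB process drove roughly 2.5 times the IOPS with MQ enabled, which is the efficiency gain behind the 23% headline number.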

One server, 60% storage read-hit

Again, we can see in the single server run significant benefits when MQ was enabled, very similar to the benefits from the previous test case. Since the server was the ‘bottleneck’ in this single-server run, the storage read-hit ratio improvement from 6% to 60% didn’t make much of a difference.

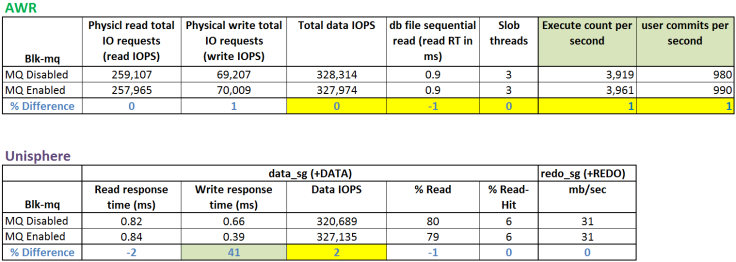

Three servers, 6% storage read-hit

As can be seen in the table below, when all three servers were running, they achieved very respectable performance numbers with our single engine PowerMax (AWR is showing close to 330 thousand data IOPS @ 0.9ms read latency). However, MQ didn’t make much of a difference. The conclusion is that when the storage is highly utilized, the MQ improvements in the host stack don’t translate to database performance improvement. In fact, the results are so close that we can say that MQ didn’t affect the performance in this case.

Three servers, 60% storage read-hit

In this last test case, with 60% storage cache read-hit ratio, we achieved even better performance than the previous test case. AWR is showing close to 477 thousand data IOPS @ 0.6ms read latency without MQ, and 494 thousand IOPS @ 0.6ms read latency with MQ.

That’s near half a million Oracle data files IOPS in this small test environment!

While MQ benefits in this test are only 4% in IOPS, latency, and transaction rate, they are still noticeable.

Conclusion

MQ is a relative newcomer to the Linux Enterprise kernels and it is actively being developed and improved. Yet, we can already see very significant benefits from enabling it, especially when considering per-host performance and utilization. It allows the server a higher I/O rate and better overall performance, at significantly lower utilization (fewer processes driving the workload).

In the Oracle space, where licenses are based on core count, improved host utilization goes a long way!

However, in cases where the storage is highly utilized, improving the host stack doesn’t carry the same performance advantages. Still, consider that many tasks in Oracle take place on a single server, even if it is part of a cluster (for example, RMAN backup/recovery, batch loads, etc.).

Other considerations include the fact that PowerPath support is limited to SLES 12 (consult the PowerPath support matrix for changes or details), and that at least in my tests, Linux native multipathing required kernel 4.x to accept my VMAX All Flash and PowerMax storage devices as MQ capable.

My recommendation? If you can – test it! I think you might see significant benefits and those are likely to improve further as blk-mq matures. Feel free to share your own experience.

Awesome post, my old friend.

BTW, no ref to SLOB? 🙂


Hey Kevin. SLOB is all over the post! Won’t be the same without it. See references in the “test cases” section. Link is under the “workload” section. Btw, can’t wait to try pgio… ;0)


Oops…there is a ref! Ignore my noise! 🙂


No worries. Good to hear from you!
